AI that talks to itself — Key Takeaways

- AI systems using inner speech improve learning outcomes and task performance significantly.

- Self-directed internal dialogue boosts adaptability in AI models for new tasks.

- AI with enhanced working memory slots shows better performance on complex tasks.

- Training methods inspired by human cognitive processes can lead to more efficient AI learning.

- Interactivity during AI training is essential for maximum performance and flexibility.

What We Know So Far

AI and Inner Speech

Recent research shows that AI systems perform better when they are trained to use inner speech alongside short-term memory. This allows them to process information more effectively, mirroring aspects of human cognition.

This method not only enhances performance but also lends a new dimension to how AI can learn from experiences, potentially leading to greater flexibility in dealing with unfamiliar challenges.

By implementing self-directed internal dialogue, AI models can significantly enhance their adaptability and learning capabilities. This approach may change the traditional methods of training AI. The ability of AI to engage in inner speech is likened to how humans solve problems and navigate their thoughts.
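As a rough illustration of what self-directed internal dialogue could look like in code, here is a minimal sketch. It is not the researchers' model: the function name `run_with_inner_speech`, the `RULE:` token format, and the two toy rules are all assumptions made up for this example. The idea it shows is only that a system can write a short note to itself and let that note, rather than the current input alone, decide how it responds when the task changes.

```python
def run_with_inner_speech(stream):
    """Process a token stream while carrying a self-generated note between steps.

    Hypothetical toy example: the note plays the role of "inner speech".
    """
    note = "copy"  # assumed starting rule; the agent's private note to itself
    outputs = []
    for token in stream:
        if token.startswith("RULE:"):
            # The agent "tells itself" which rule is now active.
            note = token[len("RULE:"):]
            continue
        # The note, not the current token alone, decides the response.
        if note == "reverse":
            outputs.append(token[::-1])
        else:
            outputs.append(token)
    return outputs


if __name__ == "__main__":
    print(run_with_inner_speech(["ab", "RULE:reverse", "cd", "RULE:copy", "ef"]))
```

Without the carried note, the inputs "cd" and "ef" would look identical in kind, and the system would have no way to know that the task had switched between them.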

Key Details and Context

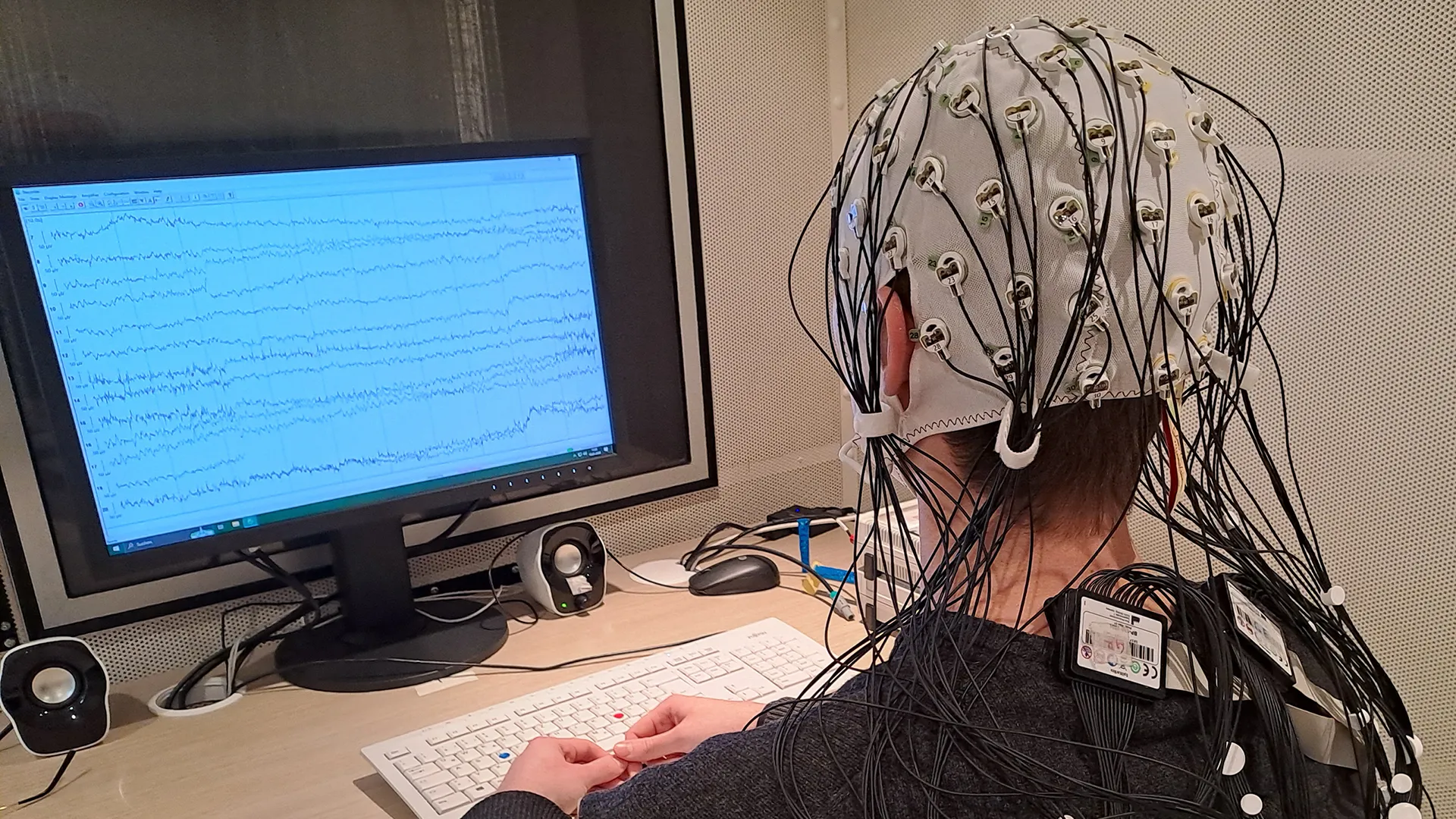

Research indicates that cognitive abilities in humans relate to the rhythm and synchronization of brain activity, which may inspire better AI learning. The nuanced approach of using inner speech can significantly alter the efficiency of AI training programs.

“To test this idea, the researchers combined self-directed internal speech, described as quiet …”

The interaction dynamics during training are crucial for the performance of AI systems. These dynamics enable AI to reflect on actions and adjust learning trajectories.

A blend of neuroscience and machine learning can uncover new insights about how AI learns. Models designed with the lessons of human cognition in mind may pave the way for breakthroughs in efficiency and understanding.

Training AI to use inner dialogue improves its capacity for multitasking and strategic thinking.

Models with multiple working memory slots perform better on complex tasks, suggesting that memory structure influences AI learning. The architecture of this memory is integral to maximizing the potential of AI functions.

AI systems that can talk to themselves show gains in flexibility and overall performance compared to those that do not. Enhanced interaction not only equips AI with functional skills but also fosters innovative problem-solving approaches.

Mechanisms of Learning

AI systems that can communicate with themselves demonstrate gains in flexibility and overall performance compared to those that do not utilize inner speech. This paradigm shift suggests that mimicking human cognitive techniques can improve machine learning.

The structure of working memory within these AI models is crucial. Systems with multiple working memory slots have shown superior performance on complex tasks, indicating that memory architecture has a direct influence on their learning processes. Exploring these architectures can further the understanding of effective AI design.
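To make the point about memory slots concrete, the following is a small sketch under stated assumptions. The class `SlotMemory`, the helper `can_reproduce`, and the oldest-item-first eviction rule are hypothetical choices for illustration; the source does not describe how the models' working memory is actually implemented. The sketch only shows why a task that requires holding several items at once cannot be solved when too few slots are available.

```python
from collections import deque


class SlotMemory:
    """Toy working memory with a fixed number of slots (hypothetical design)."""

    def __init__(self, num_slots: int):
        # A bounded deque silently drops the oldest item once it is full.
        self.slots = deque(maxlen=num_slots)

    def write(self, item):
        self.slots.append(item)

    def recall(self):
        return list(self.slots)


def can_reproduce(sequence, num_slots: int) -> bool:
    """Return True if the whole sequence is still in memory after reading it."""
    memory = SlotMemory(num_slots)
    for item in sequence:
        memory.write(item)
    return memory.recall() == list(sequence)


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, can_reproduce("abc", n))
```

With one or two slots the three-item sequence is partly overwritten before it can be recalled; with three slots it survives intact.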

What Happens Next

Future Directions in AI Learning

The interaction dynamics during training are essential for enhancing the performance of AI systems. Future research is likely to focus on developing training programs that incorporate more of these interactive elements, which could open up opportunities for more effective learning strategies.

Furthermore, continued exploration into the blend of neuroscience and machine learning is expected to unlock new insights about how AI learns and adapts to real-world environments. This growing understanding is likely to influence future AI applications in various fields.

Why This Matters

Implications for AI Development

Understanding the mechanics of cognitive abilities in humans and how they relate to AI learning can lead to advancements that benefit multiple fields including robotics and automation. The transferability of these methods may augment efficiency and performance across diverse AI applications.

“Rapid task switching and solving unfamiliar problems is something we humans do easily every day. But for AI, it’s much more challenging.”

As AI becomes increasingly integrated into society, methods that mirror human learning are expected not only to improve how machines perform tasks but also to enhance human-AI collaboration. This collaboration is essential for integrating AI technologies smoothly.

FAQ

What is inner speech in AI?

Inner speech in AI refers to the self-directed internal dialogue that aids in learning and adaptation.

How does talking to itself enhance AI learning?

It improves flexibility and overall performance, enabling better multitasking capabilities.