Qwen3.5 MoE Model — Key Takeaways

- Qwen3.5 is a sparse Mixture-of-Experts (MoE) model with 397 billion total parameters.

- Only 17 billion of those parameters are activated in a single forward pass, which keeps per-token compute well below that of a dense model of the same total size.

- With a context of up to 1 million tokens, Qwen3.5 can work over long documents and extended multi-step AI operations.

- The model combines Gated Delta Networks with the Mixture-of-Experts framework to improve processing efficiency.

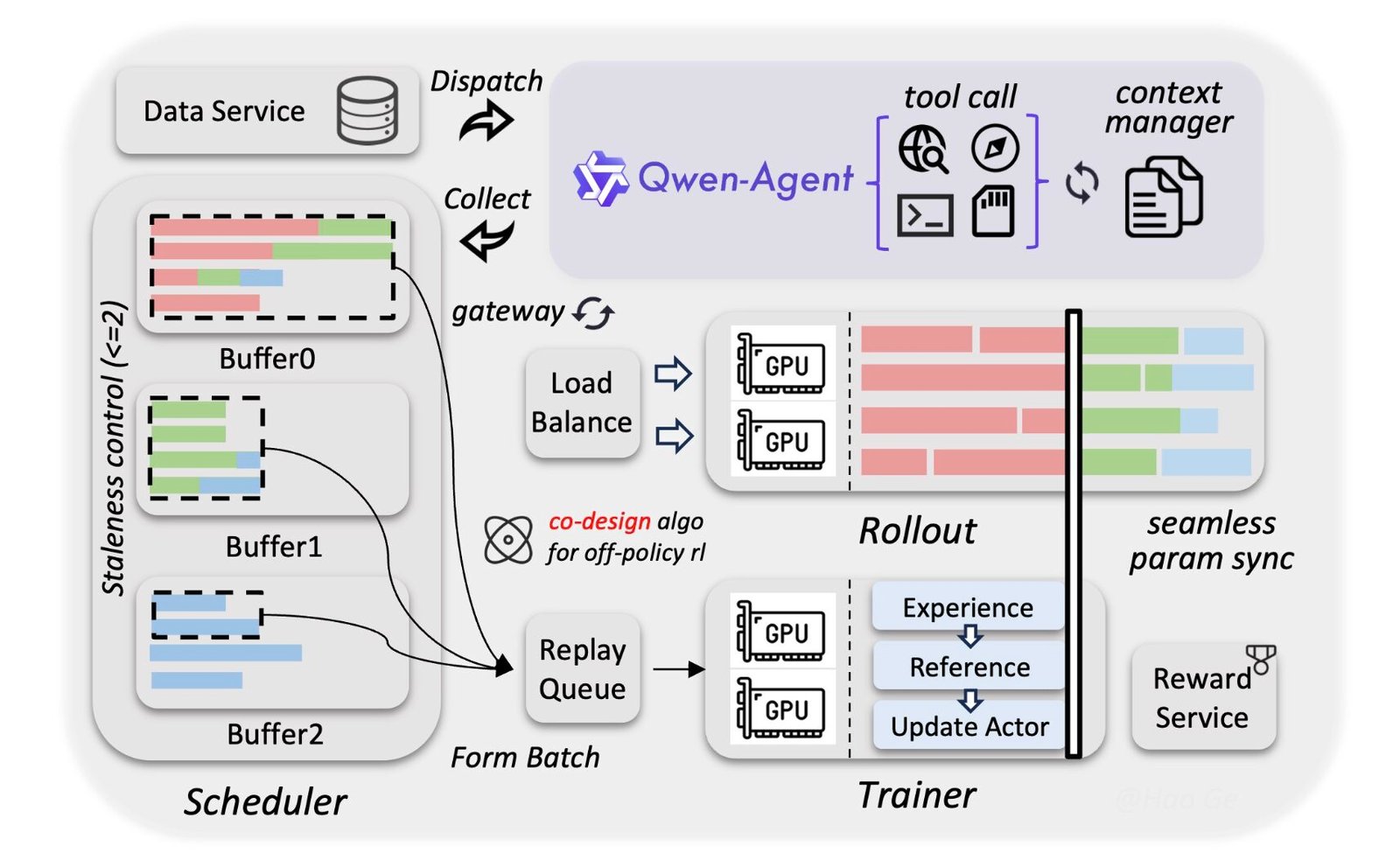

- Qwen3.5 scored 76.5 on the IFBench test, outperforming several existing proprietary models.

What We Know So Far

Release of Qwen3.5

The Alibaba Qwen Team has unveiled its latest model, Qwen3.5, which has 397 billion total parameters. The model is structured as a sparse Mixture-of-Experts (MoE) system, in which only a subset of the parameters is used for any given input.


The architecture of Qwen3.5 is designed for efficient task execution. Only 17 billion of its 397 billion parameters are activated in a single forward pass, so the compute spent per token is closer to that of a much smaller dense model while the full parameter count remains available as capacity.
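The ratio behind that claim is simple arithmetic. The sketch below uses only the two parameter counts quoted in this article; the "2 FLOPs per active parameter per token" figure is a common rule of thumb for a forward pass, not something stated in the release, and the function names are illustrative.

```python
TOTAL_PARAMS = 397e9   # total parameters, as quoted in the article
ACTIVE_PARAMS = 17e9   # parameters activated per forward pass, as quoted


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters that take part in one forward pass."""
    return active / total


def approx_forward_flops_per_token(active: float) -> float:
    """Rule-of-thumb estimate (assumption): about 2 FLOPs per active parameter."""
    return 2.0 * active


if __name__ == "__main__":
    frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    print(f"active share of parameters: {frac:.1%}")
    print(f"approx. forward FLOPs per token: {approx_forward_flops_per_token(ACTIVE_PARAMS):.2e}")
```

Under these numbers, roughly one parameter in twenty-three participates in any single forward pass.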

Handling this level of complexity efficiently helps Qwen3.5 stand out among its competitors. Demanding AI tasks are now common in professional applications, whether in healthcare, finance, or technology development.

Because only a subset of experts runs for each input, the model keeps the capacity of its full parameter count while limiting the work done per pass. The design philosophy behind Qwen3.5 is to let developers and businesses make fuller use of AI in their own products.
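To make sparse activation concrete, here is a minimal sketch of generic top-k expert routing. It illustrates the general Mixture-of-Experts technique only; the article does not describe Qwen3.5's actual router, expert count, or value of k, so every name and number below is an assumption chosen for illustration.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their scores."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)          # subtract max for stability
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    z = sum(exps.values())
    return {i: e / z for i, e in exps.items()}


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


if __name__ == "__main__":
    # Toy scalar "experts"; real experts are feed-forward sub-networks.
    experts = [lambda x: x, lambda x: 2 * x, lambda x: 100 * x, lambda x: -x]
    logits = [0.0, 0.0, -5.0, -5.0]   # the router prefers experts 0 and 1
    print(moe_forward(2.0, experts, logits, k=2))
```

Experts 2 and 3 are never called in this example, which is the point: their parameters exist but cost nothing for this input.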

Key Details and Context

More Details from the Release

Qwen3.5 was trained on trillions of multimodal tokens, which improves its visual reasoning capabilities. This diverse training corpus is intended to prepare the model for a wide range of tasks.


Qwen3.5 is designed specifically for AI agents, which makes it applicable across a range of use cases. One example is generating HTML/CSS code directly from UI screenshots.
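As a rough illustration of the screenshot-to-code use case, the sketch below builds a request body in the widely used OpenAI-style chat format with an inline image. The article does not say how Qwen3.5 is served, so the message format, the model id `qwen3.5-placeholder`, and the prompt wording are all assumptions; no network call is made.

```python
import base64
import json


def build_screenshot_request(png_bytes, model="qwen3.5-placeholder"):
    """Build a chat request asking a vision model to turn a screenshot into HTML/CSS.

    The model id is hypothetical; use whatever name your serving endpoint exposes.
    """
    image_b64 = base64.b64encode(png_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Image passed inline as a data URL (OpenAI-style content part).
                    {"type": "image_url",
                     "image_url": {"url": f"data:image/png;base64,{image_b64}"}},
                    {"type": "text",
                     "text": "Reproduce this UI as a single HTML file with inline CSS."},
                ],
            }
        ],
    }


if __name__ == "__main__":
    payload = build_screenshot_request(b"\x89PNG placeholder bytes")
    print(json.dumps(payload, indent=2)[:300])
```

The resulting dictionary can be sent to any endpoint that accepts this message format, if one is available for the model.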

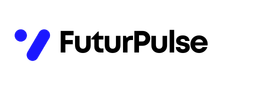

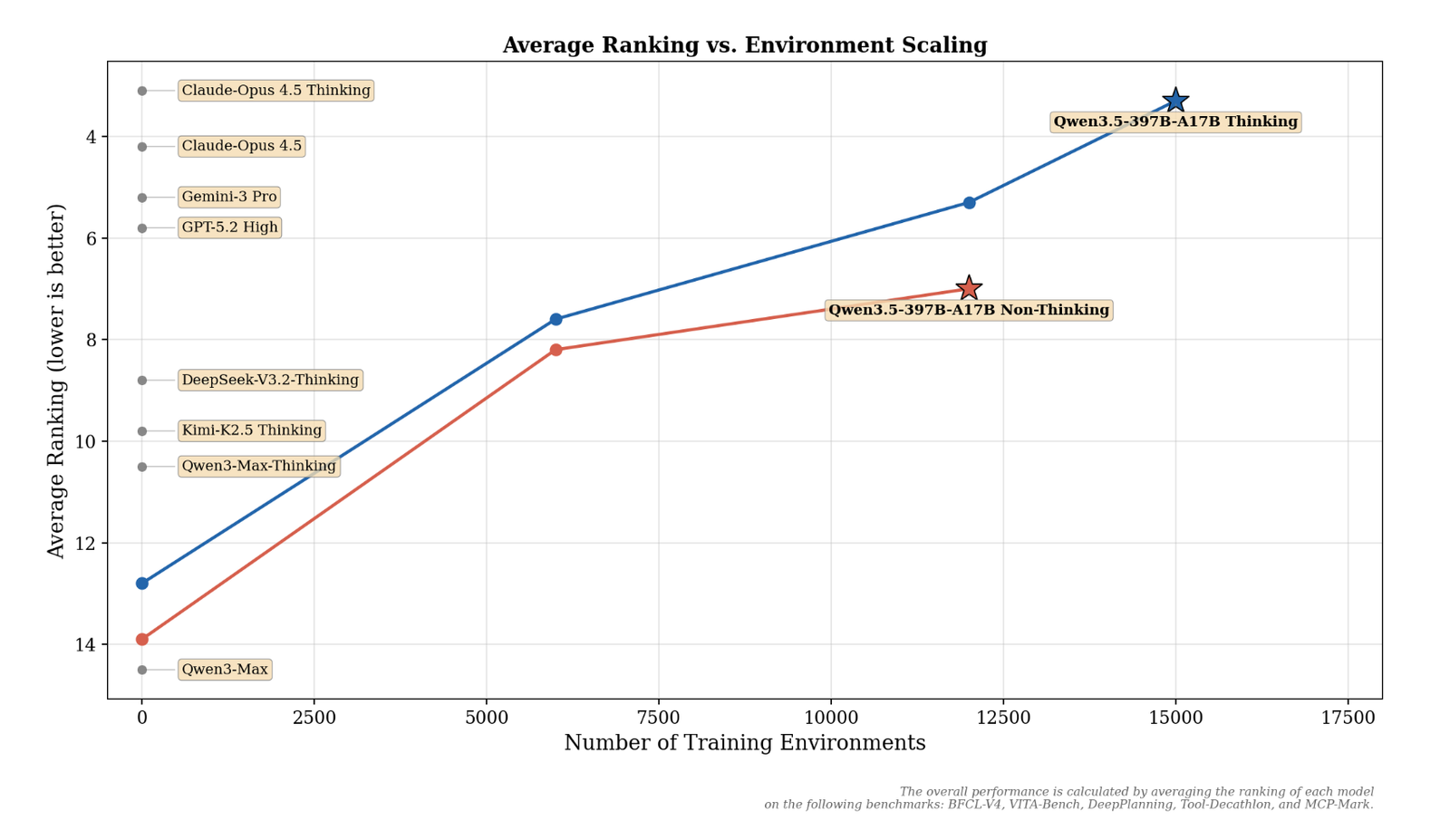

In addition to these features, Qwen3.5 was built with an asynchronous Reinforcement Learning framework. This training framework plays a role in maintaining the model’s accuracy when it processes lengthy documents that require consistent performance over a long span.

Qwen3.5 scored 76.5 on the IFBench test, an indication that it surpasses many proprietary models currently on the market.

The pairing of Gated Delta Networks with the Mixture-of-Experts architecture reflects a design focused on performance and efficiency, and it makes Qwen3.5 a notable player in the AI field.

Moreover, the capacity to process up to 1 million tokens suits Qwen3.5 to complex AI tasks that other models may struggle with. This matters for applications that need to read and analyze large bodies of data in one pass.

Advanced Processing Capabilities

One of the standout features of the Qwen3.5 model is its ability to process up to 1 million tokens. This lets it keep long contexts in view and carry out intricate AI operations, which is useful in applications ranging from conversational agents to data analysis tools.
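In practice, a fixed token limit means an application has to decide how much material fits into one request. The helper below is a minimal sketch of that bookkeeping, assuming the per-document token counts have already been measured with the model's tokenizer; the function name and the amount reserved for output are illustrative choices, not values from the release.

```python
def pack_documents(doc_token_counts, context_limit=1_000_000,
                   reserved_for_output=8_192):
    """Greedily take documents in order until the context budget is used up.

    Returns the indices of the documents that fit and the tokens they consume.
    """
    budget = context_limit - reserved_for_output
    chosen, used = [], 0
    for idx, n_tokens in enumerate(doc_token_counts):
        if used + n_tokens > budget:
            break  # the next document would overflow the context
        chosen.append(idx)
        used += n_tokens
    return chosen, used


if __name__ == "__main__":
    fits, used = pack_documents([400_000, 500_000, 200_000])
    print(fits, used)
```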

Using Gated Delta Networks alongside the MoE architecture improves the model’s processing efficiency. The aim is fast data handling together with accurate contextual understanding.
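For readers unfamiliar with the term, the sketch below shows one step of a gated delta rule as it is commonly formulated for linear-attention style layers: a fixed-size memory matrix S is decayed by a gate alpha and then corrected toward the new value with write strength beta. Whether Qwen3.5 uses exactly this form is an assumption, since the article gives no equations; the code is plain Python for readability, not an efficient implementation.

```python
def gated_delta_step(S, k, v, alpha, beta):
    """One recurrent update: S <- alpha*S + beta * (v - alpha*S@k) k^T.

    S is a d_v x d_k memory (list of rows), k a key, v a value,
    alpha in [0, 1] the decay gate, beta in [0, 1] the write strength.
    """
    d_v, d_k = len(S), len(k)
    # What the decayed memory currently returns for this key.
    pred = [alpha * sum(S[i][j] * k[j] for j in range(d_k)) for i in range(d_v)]
    # Decay the old memory, then write only the prediction error (the "delta").
    return [
        [alpha * S[i][j] + beta * (v[i] - pred[i]) * k[j] for j in range(d_k)]
        for i in range(d_v)
    ]


def read(S, q):
    """Query the memory: o = S @ q."""
    return [sum(row[j] * q[j] for j in range(len(q))) for row in S]


if __name__ == "__main__":
    S = [[0.0, 0.0], [0.0, 0.0]]
    S = gated_delta_step(S, k=[1.0, 0.0], v=[3.0, 4.0], alpha=1.0, beta=1.0)
    S = gated_delta_step(S, k=[1.0, 0.0], v=[5.0, 6.0], alpha=1.0, beta=1.0)
    print(read(S, [1.0, 0.0]))  # the second write replaces the first for this key
```

Because the memory has a fixed size regardless of sequence length, layers of this kind are a natural fit for very long inputs.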

What Happens Next

Future Implications for AI

Because Qwen3.5 is designed for AI agents, its release points toward more capable automation in a variety of applications. Tasks such as generating HTML/CSS code from user interface screenshots show the kind of work it is meant to take on.


With a score of 76.5 on the IFBench test, Qwen3.5 outperforms various proprietary models, which hints at a leading role in the next generation of AI technology. Its ability to learn from extensive datasets and provide actionable insights may ultimately open up a broader range of applications.

Why This Matters

Impact on AI Development

Alibaba’s introduction of the Qwen3.5 model marks a significant step forward in machine learning. Its architecture and functionality pave the way for more robust AI agents capable of tackling increasingly complex tasks.

The model’s foundation on Gated Delta Networks and its capacity to process expansive datasets point to a promising future for AI. These advancements are expected to have far-reaching implications, influencing not just technical developments but also how businesses and industries operate.

In summary, the Qwen3.5 model positions Alibaba at the forefront of AI technology, cementing its role in driving innovation and facilitating more intelligent, adaptable applications across various sectors.

FAQ

Frequently Asked Questions

In light of the Qwen3.5 release, here are some commonly raised queries:

What is the Qwen3.5 model?

Qwen3.5 is a sparse Mixture-of-Experts AI model developed by Alibaba with 397 billion total parameters, of which 17 billion are activated in a single forward pass.

What capabilities does Qwen3.5 offer?

It can process up to 1 million tokens, enabling it to perform complex AI tasks effectively.

How did Qwen3.5 perform in testing?

It scored 76.5 on the IFBench test, surpassing many proprietary AI models.