Key Takeaways

- Recent AI coding models such as GPT-5 show a marked decline in quality, producing buggier code and slowing developers down.

- New models often produce silent failures, making debugging challenging for developers.

- Inexperienced users have contributed to a decline in training data quality for coding AIs.

- Older models like GPT-4 still give more useful output than the latest models when asked to handle coding errors.

- Vibe coding may speed up development but can overlook essential coding practices, leading to errors.

What We Know So Far

Recent discussions of AI coding assistants point to a worrying trend: the quality of these tools has plateaued and, in some cases, begun to decline. Tasks that once took five hours with AI assistance are reportedly taking up to eight hours because of inefficiencies in the newer models. This decline raises significant questions for developers who rely on AI to streamline their coding.

Related image — Source: spectrum.ieee.org — Original

Newer models, such as GPT-5, have come under scrutiny for generating code that fails silently. This means that errors may go unnoticed until they cause significant disruptions, complicating the debugging process.
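
To make "fails silently" concrete, here is a minimal sketch in Python. It is a hypothetical illustration, not output from GPT-5 or any other model: the function names, the `rows` data, and the missing `cost` column are all assumptions chosen for the example. The first function hides a missing column behind a default value and returns a plausible-looking number; the second raises an error at the point of the mistake.

```python
def average_silent(rows, column):
    """Hypothetical 'fix' that hides a missing column by substituting 0.0."""
    values = [row.get(column, 0.0) for row in rows]
    return sum(values) / len(values)


def average_strict(rows, column):
    """Fails loudly: a missing column raises KeyError where the mistake is."""
    values = [row[column] for row in rows]
    return sum(values) / len(values)


# Illustrative data: the column is called "price", but the caller asks for "cost".
rows = [{"price": 10.0}, {"price": 30.0}]

# Runs without complaint, yet the result has nothing to do with the real data.
print(average_silent(rows, "cost"))

# The strict version surfaces the mistake immediately.
try:
    average_strict(rows, "cost")
except KeyError as exc:
    print(f"missing column: {exc}")
```

The silent version is the harder one to debug: nothing crashes, so the wrong number can travel through the rest of a program before anyone notices.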

Evidence of Decline

An analysis highlighted that older models like GPT-4 provided more useful outputs when addressing coding errors. Developers who engaged with these models reported a smoother workflow, indicating that the evolution toward newer models isn’t necessarily an improvement.

Moreover, the influx of inexperienced users has exacerbated the problem, as their interactions contribute to poorer training data quality. This decline in input data does not bode well for future AI advancements, making it crucial for developers to tread carefully.

Key Details and Context

More Details from the Release

- The surge of inexperienced users has negatively impacted the quality of training data for coding assistants.

- Older models like GPT-4 provided more useful outputs than newer models when confronted with coding errors.

- Newer models like GPT-5 often generate code that fails silently, making errors difficult to detect.

- Tasks that previously took five hours with AI assistance are now taking longer, sometimes up to eight hours.

- AI coding assistants have reached a quality plateau, and recent models are in decline.

The shift toward prioritizing short-term gains over quality solutions is evident in contemporary AI coding assistants. While tools may enhance productivity by enabling rapid prototyping, they still come loaded with bugs. Developers now find themselves navigating an environment where speed might yield errors that negate the time saved.

The concept of “vibe coding,” a practice aimed at speeding up development, can gloss over critical coding practices. As one expert describes the approach:

“give in to the vibes, embrace exponentials, and forget that the code even exists.”

Impact of the Developer Community

Inexperienced users stepping into AI coding are not just consuming content; they are also contributing to it. Their interactions with coding models can dilute training data quality, yielding outputs that lack robustness. Developers then have to distinguish useful outputs from potentially misleading ones.

Interestingly, while the participation of inexperienced users has introduced noise into the training datasets, it has also opened up discussions about how to better integrate novices into productive coding environments.

What Happens Next

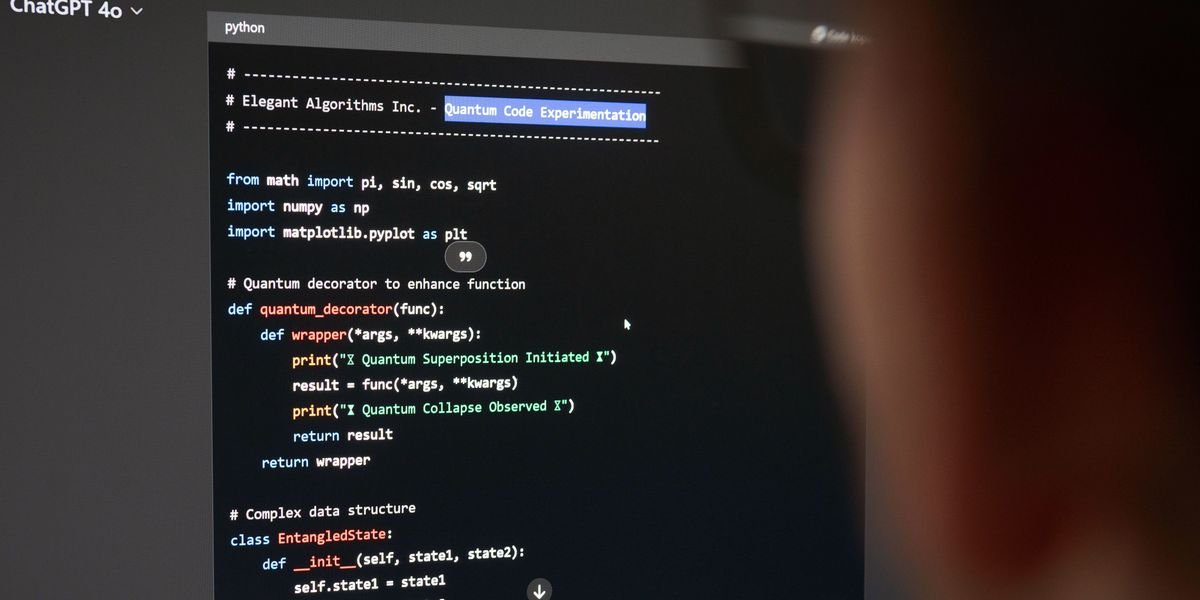

The path ahead for AI coding assistants requires a careful evaluation of the designs and training data used to develop these tools. Tech firms will need to address the silent failures observed in new models, recognizing that user experience with these tools plays a crucial role in their effectiveness.

Additionally, rectifying the decline in code quality must become a priority. Developers may need to lean on older, more reliable models for specific tasks until the newer systems can catch up.

Potential Solutions

One possible solution is to train AI models on better datasets that represent a wider range of professional coding standards. Ensuring that users, particularly newcomers, have access to foundational coding knowledge could also improve the quality of their interactions with these tools.

Integrating feedback loops from experienced developers could help fine-tune AI outputs, making them more reliable and productive in the future.

Why This Matters

The ramifications of the decline in AI coding assistance are profound. As coding becomes increasingly crucial in various sectors, ensuring that tools effectively support developers is more critical than ever. The current trends suggest a need for a recalibration in AI development that places quality above merely racing towards innovation.

“I remember that [the original app] took me almost a month, because I had to study a lot of things I didn’t understand,”

Developers’ productivity is at stake, and heavy reliance on these tools also diverts focus from the skills and practices that build mastery of code. The tech industry must respond thoughtfully, balancing automation with the essential art of coding.

FAQ

What issues arise from using the latest AI coding models?

Newer models often generate code that fails silently, making errors difficult to detect and fix.