Key Takeaways

- Amazon Nova LLM-as-a-Judge is a model for evaluating generative AI outputs, developed through a multi-step training process.

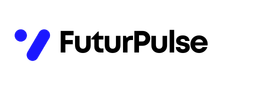

- It achieves only a 3% aggregate bias in comparisons with human annotations.

- The evaluation framework supports detailed metrics, including win rates and confidence intervals.

- Nova outperforms Meta’s J1 8B on key judge benchmarks, including JudgeBench and PPE.

- Setting up Nova on Amazon SageMaker involves preparing datasets and configuring evaluation settings.

What We Know So Far

Overview of Amazon Nova

Amazon Nova LLM-as-a-Judge is a state-of-the-art AI model designed to evaluate the outputs of generative AI systems. It was developed through a comprehensive, multi-step training process and provides a framework for assessing AI-generated content.

Amazon reports that Nova shows an aggregate bias of only 3% relative to human annotations, which supports its use as a reliable tool for AI evaluation.
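The summary does not say how "aggregate bias" is computed. As an illustration only, and not Amazon's published definition, the sketch below compares how often a judge prefers response A with how often human annotators do over the same pairwise items; the absolute gap between the two rates is one simple measure of systematic deviation from human labels. The function name, the `"A"`/`"B"` label encoding, and the toy labels are hypothetical.

```python
from typing import Sequence


def preference_rate_gap(judge: Sequence[str], human: Sequence[str]) -> float:
    """Absolute difference between the judge's and the humans' rate of preferring "A".

    Hypothetical metric for illustration; each label is "A" or "B" for one item.
    """
    if len(judge) != len(human) or not judge:
        raise ValueError("need two non-empty label lists of equal length")
    judge_rate = sum(label == "A" for label in judge) / len(judge)
    human_rate = sum(label == "A" for label in human) / len(human)
    return abs(judge_rate - human_rate)


# Toy labels for ten pairwise items (not real evaluation data).
judge_labels = ["A", "A", "B", "A", "B", "A", "B", "B", "A", "A"]
human_labels = ["A", "B", "B", "A", "B", "A", "B", "B", "A", "A"]
print(f"{preference_rate_gap(judge_labels, human_labels):.2f}")
```

A small gap alone does not prove item-level agreement, since offsetting disagreements can cancel out, so a per-item agreement rate is worth reporting alongside it.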

Training Methodology

The model is built with supervised training and reinforcement learning on publicly available annotated datasets. This training is intended to equip the model to understand context and judge the quality of AI outputs.

Training on diverse datasets is meant to improve Nova's ability to judge a wide variety of generative AI scenarios.

Key Details and Context

More Details from the Release

Implementing Nova LLM-as-a-Judge on Amazon SageMaker involves preparing datasets and configuring evaluation settings.
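As a minimal sketch of the dataset-preparation step, the code below writes pairwise records to a JSON Lines file, one prompt and two candidate responses per line. The field names `prompt`, `response_A`, and `response_B` are an assumption based on the pairwise format AWS describes for this feature, and the helper name and output filename are hypothetical; confirm the schema against the current SageMaker documentation before use.

```python
import json
from pathlib import Path


def write_pairwise_jsonl(records, path):
    """Write (prompt, response_a, response_b) tuples as JSON Lines.

    Field names are assumed; verify them against the SageMaker docs.
    """
    path = Path(path)
    with path.open("w", encoding="utf-8") as f:
        for prompt, resp_a, resp_b in records:
            row = {"prompt": prompt, "response_A": resp_a, "response_B": resp_b}
            # One JSON object per line; keep non-ASCII text readable.
            f.write(json.dumps(row, ensure_ascii=False) + "\n")
    return path


pairs = [
    ("What is the capital of France?", "Paris.", "The capital of France is Paris."),
    ("Name a prime number greater than 10.", "11", "12"),
]
out = write_pairwise_jsonl(pairs, "llmaj_eval.jsonl")
print(out.read_text(encoding="utf-8").count("\n"))
```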

The evaluation framework allows for the assessment of model outputs across numerous categories including creativity and real-world knowledge.

Amazon Nova LLM-as-a-Judge performs better than Meta’s J1 8B on key judge benchmarks like JudgeBench and PPE.

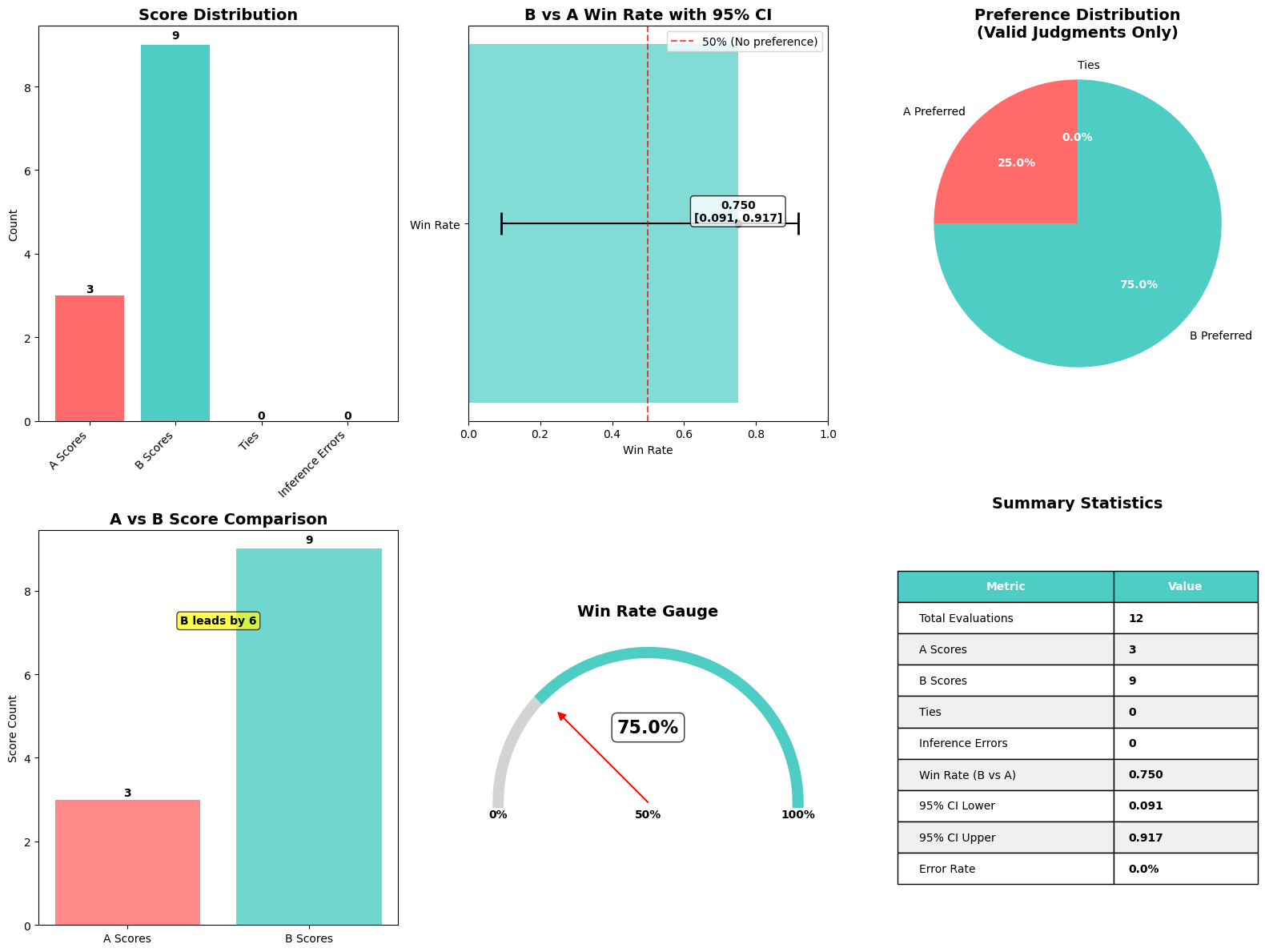

Metrics produced by Amazon Nova LLM-as-a-Judge include win rates and confidence intervals for model comparisons.

The evaluation process allows for pairwise comparisons between model iterations, facilitating data-driven decisions.

Amazon Nova LLM-as-a-Judge achieves only a 3% aggregate bias in evaluations compared to human annotations.

The Nova LLM-as-a-Judge capability was built using supervised training and reinforcement learning on publicly annotated datasets.

Amazon Nova LLM-as-a-Judge evaluates generative AI outputs and was developed through a comprehensive, multi-step training process.

Evaluation Process

The evaluation process in Amazon Nova supports pairwise comparison of model iterations: the responses of two models to the same prompt are judged directly against each other, which supports data-driven decision making.
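The pairwise pattern can be sketched as a loop that asks a judge for a verdict on each (prompt, response A, response B) triple and tallies the outcomes. `compare_models` and `toy_judge` are hypothetical: in practice the verdicts would come from the Nova judge running as a SageMaker evaluation job rather than a local function, and the toy judge's length heuristic is only a placeholder.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple

# A judge takes (prompt, response_a, response_b) and returns "A", "B", or "tie".
Judge = Callable[[str, str, str], str]


def compare_models(items: Iterable[Tuple[str, str, str]], judge: Judge) -> Counter:
    """Ask the judge for a verdict on each pair and tally the outcomes."""
    tally = Counter()
    for prompt, resp_a, resp_b in items:
        verdict = judge(prompt, resp_a, resp_b)
        if verdict not in {"A", "B", "tie"}:
            raise ValueError(f"unexpected verdict: {verdict!r}")
        tally[verdict] += 1
    return tally


def toy_judge(prompt: str, resp_a: str, resp_b: str) -> str:
    # Placeholder judge: prefers the shorter answer and ties on equal length.
    if len(resp_a) == len(resp_b):
        return "tie"
    return "A" if len(resp_a) < len(resp_b) else "B"


items = [
    ("q1", "short", "a longer answer"),
    ("q2", "a much longer answer", "brief"),
    ("q3", "same", "size"),
]
print(compare_models(items, toy_judge))
```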

Metrics produced during evaluations include win rates and confidence intervals, making it easier for developers and researchers to assess model performance quantitatively.
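Win rates and confidence intervals can be derived from such verdict tallies. This is a minimal sketch under two stated choices, not necessarily how Nova computes its reported metrics: ties are excluded from the win rate, and the interval is a Wilson score interval at roughly 95% confidence. The function name and the counts are hypothetical.

```python
import math


def win_rate_with_ci(a_wins: int, b_wins: int, z: float = 1.96):
    """Win rate of model B over model A (ties excluded) with a Wilson score interval.

    z = 1.96 gives an approximate 95% interval.
    """
    n = a_wins + b_wins
    if n == 0:
        raise ValueError("no decisive comparisons")
    p = b_wins / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return p, max(0.0, center - half), min(1.0, center + half)


rate, low, high = win_rate_with_ci(a_wins=38, b_wins=62)
print(f"win rate {rate:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

The Wilson interval is used here because it behaves better than the plain normal approximation when the win rate is near 0 or 1 or the sample is small. If the interval includes 0.5, the comparison does not clearly favor either model.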

Performance Benchmarking

Reported results show Amazon Nova LLM-as-a-Judge outperforming Meta’s J1 8B on key judge benchmarks, including JudgeBench and PPE.

These results make Nova a strong candidate for evaluation work in the rapidly evolving field of generative AI.
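Judge benchmarks such as JudgeBench and PPE score a judge largely on whether it picks the better response in labeled pairs. As a simplified illustration, not the official scoring code of either benchmark, the sketch below computes overall and per-category accuracy from (category, judge choice, gold choice) triples. The function name, category labels, and rows are hypothetical toy values, not reported results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_category(
    rows: Iterable[Tuple[str, str, str]],
) -> Tuple[float, Dict[str, float]]:
    """rows: (category, judge_choice, gold_choice). Returns (overall, per-category) accuracy."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for category, choice, gold in rows:
        totals[category] += 1
        hits[category] += int(choice == gold)
    n = sum(totals.values())
    if n == 0:
        raise ValueError("no rows")
    overall = sum(hits.values()) / n
    return overall, {c: hits[c] / totals[c] for c in totals}


rows = [
    ("math", "A", "A"),
    ("math", "B", "A"),
    ("coding", "B", "B"),
    ("coding", "A", "A"),
]
overall, per_category = accuracy_by_category(rows)
print(overall, per_category)
```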

What Happens Next

Implementation on SageMaker

Implementing Nova LLM-as-a-Judge on Amazon SageMaker involves a streamlined process of preparing datasets and configuring evaluation settings, after which the evaluation runs on Amazon’s cloud infrastructure.
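Before launching an evaluation job, it can help to check the dataset locally. The sketch below assumes a three-field pairwise JSONL schema (`prompt`, `response_A`, `response_B`), which should be verified against the SageMaker documentation; the function name and sample filename are hypothetical, and the check is not part of any AWS SDK.

```python
import json
from pathlib import Path
from typing import List

REQUIRED_FIELDS = ("prompt", "response_A", "response_B")  # assumed schema


def validate_pairwise_jsonl(path) -> List[str]:
    """Return human-readable problems; an empty list means the file looks OK."""
    problems = []
    with Path(path).open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                problems.append(f"line {lineno}: blank line")
                continue
            try:
                row = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(row, dict):
                problems.append(f"line {lineno}: expected a JSON object")
                continue
            # Every required field must be a non-empty string.
            for field in REQUIRED_FIELDS:
                value = row.get(field)
                if not isinstance(value, str) or not value.strip():
                    problems.append(f"line {lineno}: missing or empty {field!r}")
    return problems


sample = Path("sample_eval.jsonl")
sample.write_text(
    '{"prompt": "2+2?", "response_A": "4", "response_B": "5"}\n'
    '{"prompt": "Capital of Japan?", "response_A": "Tokyo"}\n',
    encoding="utf-8",
)
print(validate_pairwise_jsonl(sample))
```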

The integration of Nova into existing workflows is expected to enhance the capabilities of researchers and developers as they strive to refine their generative AI models.

Future Prospects

As generative AI continues to evolve, tools like Amazon Nova LLM-as-a-Judge are expected to play a pivotal role. By providing low-bias, detailed evaluations, Nova can help steer AI development toward more accurate and useful outputs.

With the ongoing improvements in AI technologies, future iterations of Nova may bring even more sophisticated features and enhancements to the evaluation process.

Why This Matters

Implications for AI Development

Using Amazon Nova for AI evaluation can change how models are assessed. Its low bias and clear metrics make evaluation more transparent, allowing developers to make informed adjustments to their models.

This transparency is especially crucial in areas where AI models are used to make significant decisions, as ensuring fairness and accuracy remains a top priority.

Broader Impact on the Industry

As industries increasingly rely on AI technologies, the ability to evaluate these models rigorously is likely to determine which deployments succeed. Amazon Nova offers a reference point for applying generative AI in practical settings.

These developments indicate a future where AI systems can be trusted not only by their developers but also by the communities and sectors that integrate them into their operations.

FAQ

Common Questions

Here are some frequently asked questions about Amazon Nova LLM-as-a-Judge:

What is Amazon Nova LLM-as-a-Judge?

Amazon Nova LLM-as-a-Judge is an AI model that judges the quality of outputs from generative AI systems.

How does Nova achieve low bias?

Nova achieves low bias through supervised training and reinforcement learning on publicly annotated datasets.

What metrics does Nova provide?

Nova provides metrics such as win rates and confidence intervals for model comparisons.

How does the evaluation process work?

It supports pairwise comparisons between model iterations, enabling data-driven decision making.